Best Practices for Data Set Cleaning Before Analysis

Data drives business decision-making, influencing everything from strategic planning to customer engagement. However, with huge volumes of data flowing in through many channels and methods, many businesses end up with inaccurate, irrelevant, and outdated records in their databases. In such cases, it is important to put clear data-cleaning strategies in place.

Outdated or irrelevant data in your CRM system has serious consequences: it can undermine your business decisions and even lead to financial losses. This is where data cleaning, also called data scrubbing, becomes indispensable. Data cleaning is the process of identifying and then correcting or removing errors, inconsistencies, and inaccuracies in data sets so that they are accurate, complete, and consistent. It is a critical step in the data analysis workflow, laying the foundation for robust and reliable insights. This blog explains why clean data sets matter and outlines best practices for cleaning your data sets before analysis.

Why Is it Important to Have Clean Data Sets?

Clean data sets are essential for numerous reasons, each contributing to the effectiveness and reliability of data-driven decision-making processes.

1. Ensuring Accurate Analysis

Accurate data is the foundation of any reliable analysis. Clean data sets eliminate errors and inconsistencies that can skew results, ensuring that conclusions rest on precise and trustworthy information.

2. Enhancing Decision-Making

Decision-makers rely on data to guide strategic choices. Clean data sets give these decisions a sound basis, allowing leaders to act confidently and build their strategies on evidence rather than assumptions.

3. Increasing Operational Efficiency

Clean data reduces the need for extensive manual corrections and rework. This saves time and resources, allowing teams to focus on more critical tasks instead of troubleshooting data issues.

4. Facilitating Compliance

Many industries are governed by strict data regulations. Clean data sets help organizations meet compliance requirements and avoid the legal issues and fines associated with inaccurate or mishandled data. For example, if a financial firm does not keep its data clean and up to date, its records may show wrong figures and transactions, such as overstated or understated taxable revenue. Overstated revenue can lead to overpaying tax, while understated revenue can result in penalties and other legal fines if the authorities discover it.

5. Improving Customer Satisfaction

Accurate data is crucial for understanding customer needs and behaviors. Clean data sets ensure that marketing strategies, customer service responses, and product development are based on real insights, leading to higher customer satisfaction and loyalty.

Best Practices for Data Set Cleaning Before Analysis

1. Have Clear Analysis Goals

Before beginning the data cleaning process, it’s vital to define your analytical goals clearly. Understand the specific questions you aim to answer and the insights you seek. This clarity helps you identify which data sets are relevant and which can be disregarded. Clear goals also guide the cleaning process itself: they keep it aligned with your end objectives, prevent unnecessary data handling, and help you prioritize the cleaning tasks that directly affect your analysis.
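
As a minimal sketch of letting the goal decide what you keep, the Python below assumes a hypothetical question (“What is the average order value per region?”) and hypothetical field names such as `order_value`, `fax`, and `notes`. It keeps only the fields that question needs and sets the rest aside before any cleaning begins.

```python
# Hypothetical analysis goal: "What is the average order value per region?"
# Only the fields needed to answer it are kept; everything else is set aside.
REQUIRED_FIELDS = ("order_id", "region", "order_value")


def select_relevant(records, required=REQUIRED_FIELDS):
    """Project each record onto the fields the analysis goal needs."""
    return [{field: rec.get(field) for field in required} for rec in records]


# Hypothetical raw export with extra fields the goal does not need.
raw = [
    {"order_id": 1, "region": "North", "order_value": 120.0, "fax": "555-0100", "notes": ""},
    {"order_id": 2, "region": "South", "order_value": 80.0, "fax": None, "notes": "gift"},
]

relevant = select_relevant(raw)
print(relevant)
# [{'order_id': 1, 'region': 'North', 'order_value': 120.0}, {'order_id': 2, 'region': 'South', 'order_value': 80.0}]
```

With the irrelevant fields dropped, later cleaning effort goes only into the three columns the question actually depends on.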

2. Maintain High-Quality Benchmarks

Setting up data quality benchmarks is essential to maintaining the integrity of your data. Establish criteria, aligned with your requirements, that data must meet to be considered high quality, such as mandatory fields that must be filled, valid value ranges, and accepted formats. These benchmarks serve as a reference point during the cleaning process, helping you identify and rectify deviations. Enforcing them ensures that only reliable data is used in analysis, enhancing the validity of your results. They also give you a systematic way to evaluate and improve data quality continuously.
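
Benchmarks are easiest to apply consistently when they are written down as explicit rules. The Python sketch below is one way to do that; the three rules, the field names, and the deliberately simple email pattern are illustrative assumptions rather than any standard, so substitute the criteria that match your own requirements.

```python
import re

# Hypothetical benchmarks for a customer table; replace them with your own criteria.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # deliberately simple check

BENCHMARKS = {
    "customer_id is present": lambda r: r.get("customer_id") is not None,
    "email is well formed": lambda r: bool(EMAIL_PATTERN.match(r.get("email") or "")),
    "age is between 18 and 120": lambda r: isinstance(r.get("age"), int) and 18 <= r["age"] <= 120,
}


def benchmark_report(records, benchmarks=BENCHMARKS):
    """Return the share of records (0.0 to 1.0) that pass each benchmark."""
    total = len(records)
    return {
        name: (sum(1 for r in records if rule(r)) / total if total else 0.0)
        for name, rule in benchmarks.items()
    }


def failing_records(records, benchmarks=BENCHMARKS):
    """List (record index, benchmark name) pairs for every deviation found."""
    return [
        (i, name)
        for i, r in enumerate(records)
        for name, rule in benchmarks.items()
        if not rule(r)
    ]


customers = [
    {"customer_id": 1, "email": "ana@example.com", "age": 34},
    {"customer_id": None, "email": "bob@example", "age": 29},
    {"customer_id": 3, "email": "cy@example.org", "age": 240},
]

for name, share in benchmark_report(customers).items():
    print(f"{name}: {share:.0%}")  # each rule passes for 2 of 3 records here, i.e. 67%
print(failing_records(customers))
# [(1, 'customer_id is present'), (1, 'email is well formed'), (2, 'age is between 18 and 120')]
```

Tracking the pass rate per rule over time is one simple way to see whether data quality is improving or slipping.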

3. Remove Duplicate Entries

Duplicate entries can significantly skew analysis results, leading to incorrect conclusions; a sale recorded twice, for instance, inflates revenue totals. Identifying and removing these duplicates is essential to maintaining data integrity. Use automated software tools to detect and eliminate duplicate records efficiently, and ensure each record is unique so you do not inflate the data set or misrepresent the information. Removing duplicates streamlines your data, making it more manageable and accurate for analysis. This step is especially important in large data sets, where duplicates can easily go unnoticed.
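
Automated tools differ, but the core idea can be sketched in a few lines of Python. This version assumes that two records are duplicates when hypothetical key fields (`txn_id` and `customer`) match after ignoring case and surrounding whitespace; which fields define a duplicate is a judgment call for your own data. It also shows how a single duplicated transaction inflates a revenue total.

```python
def normalize_key(record, key_fields):
    """Build a comparison key that ignores case and surrounding whitespace."""
    return tuple(str(record.get(f, "")).strip().lower() for f in key_fields)


def remove_duplicates(records, key_fields):
    """Keep the first occurrence of each key; return (unique, duplicates)."""
    seen = set()
    unique, duplicates = [], []
    for rec in records:
        key = normalize_key(rec, key_fields)
        if key in seen:
            duplicates.append(rec)
        else:
            seen.add(key)
            unique.append(rec)
    return unique, duplicates


# Hypothetical transactions: the third row repeats the first with different formatting.
transactions = [
    {"txn_id": "T-001", "customer": "Acme Ltd", "amount": 500.0},
    {"txn_id": "T-002", "customer": "Beta Inc", "amount": 250.0},
    {"txn_id": "t-001 ", "customer": "acme ltd", "amount": 500.0},
]

unique, dupes = remove_duplicates(transactions, key_fields=("txn_id", "customer"))
print(sum(t["amount"] for t in transactions))  # 1250.0, inflated by the duplicate
print(sum(t["amount"] for t in unique))        # 750.0
print(len(dupes))                              # 1
```

Keeping the removed records in a separate list, rather than discarding them silently, makes it easy to review what was dropped.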

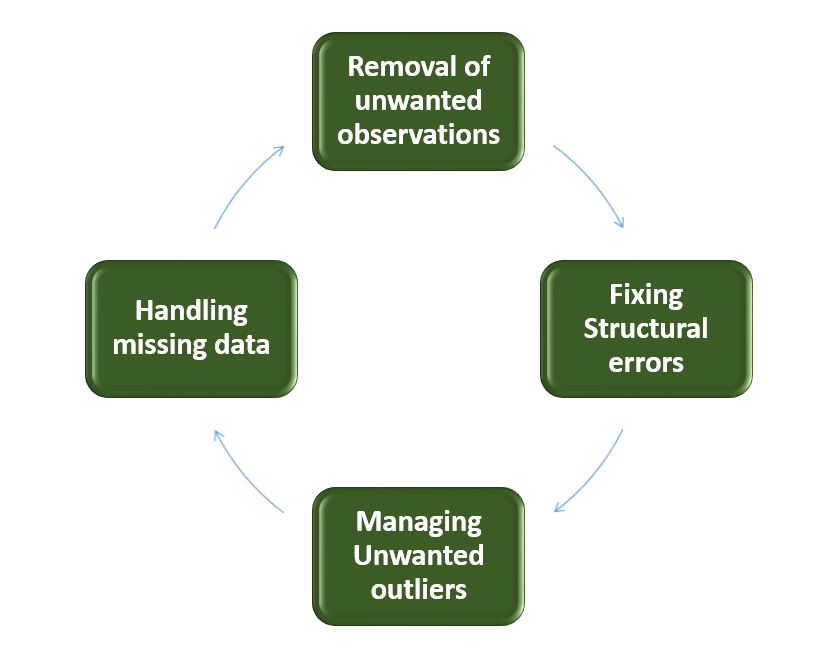

4. Identify Data Errors and Fix Them

Identifying and fixing data errors is a fundamental aspect of data cleaning. Data errors can include incorrect values, missing data, and outliers that deviate significantly from expected patterns. Implement validation rules and error-checking algorithms to detect these anomalies. Once they are identified, take corrective action: update incorrect values, impute or remove missing data, and investigate outliers to decide whether they are errors to correct or genuine extreme values to keep. Fixing these errors ensures that your data is accurate and reliable, providing a solid foundation for robust analysis and decision-making.
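
The following Python sketch walks through those corrective actions on a single hypothetical column of order values. It assumes a validation rule that amounts cannot be negative, fills missing values with the median (one common imputation choice among several), and flags outliers using Tukey's fences at 1.5 times the interquartile range (IQR), a widely used rule of thumb rather than the only option. Flagged outliers are reported for review instead of being deleted automatically.

```python
from statistics import median, quantiles


def flag_invalid(values, min_allowed=0.0):
    """Validation rule: return indexes of values below the allowed minimum."""
    return [i for i, v in enumerate(values) if v is not None and v < min_allowed]


def impute_with_median(values):
    """Fill missing (None) entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]


def iqr_outliers(values, k=1.5):
    """Return values outside Tukey's fences [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Hypothetical order values: one missing, one negative (invalid), one suspiciously large.
order_values = [120.0, 95.0, None, 110.0, -40.0, 105.0, 4999.0, 100.0]

# 1. Validation: treat impossible negative amounts as missing so they get re-filled.
bad = flag_invalid(order_values)
cleaned = [None if i in bad else v for i, v in enumerate(order_values)]

# 2. Missing data: impute with the median, which is far less sensitive
#    to the 4999.0 value than the mean would be.
cleaned = impute_with_median(cleaned)

# 3. Outliers: flag for review rather than deleting automatically.
print(bad)                    # [4]
print(cleaned)                # [120.0, 95.0, 107.5, 110.0, 107.5, 105.0, 4999.0, 100.0]
print(iqr_outliers(cleaned))  # [4999.0]
```

Whether 4999.0 is a typo or a genuine bulk order is a question the code cannot answer; that decision belongs to someone who knows the business.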

5. Focus on Standardization of Your Data Sets

Standardizing your data means ensuring uniform formats and conventions across your data sets. This can include standardizing date formats, numerical units, and categorical values, for example writing every date as YYYY-MM-DD, recording every weight in kilograms, and mapping “USA”, “U.S.”, and “United States” to a single label. Standardization simplifies data integration from multiple sources, making it easier to compare and merge data sets. It also reduces errors and inconsistencies that can arise from disparate data formats. By focusing on standardization, you ensure that your data is consistent and interpretable, facilitating smoother analysis and reporting.
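
Here is a minimal Python sketch of standardizing the three kinds of values this step covers: dates, units, and categories. The target conventions (ISO 8601 dates, kilograms, and one canonical country label), the accepted input date formats, and the alias table are all assumptions for illustration. Note that it reads `05/03/2024` day-first, as 5 March 2024; if your sources use month-first dates, the format list must change, because the two cannot be told apart from the text alone.

```python
from datetime import datetime

# Hypothetical target conventions: ISO 8601 dates, weights in kg, one label per country.
INPUT_DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%d.%m.%Y")  # assumes day-first sources
TO_KG = {"kg": 1.0, "g": 0.001, "lb": 0.45359237}
COUNTRY_ALIASES = {
    "usa": "United States",
    "u.s.": "United States",
    "united states": "United States",
    "uk": "United Kingdom",
    "u.k.": "United Kingdom",
}


def standardize_date(text):
    """Parse any accepted input format and return the date as YYYY-MM-DD."""
    for fmt in INPUT_DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).strftime("%Y-%m-%d")
        except ValueError:
            continue
    raise ValueError(f"Unrecognized date format: {text!r}")


def standardize_weight(value, unit):
    """Convert a weight to kilograms, rounded to the nearest gram."""
    return round(value * TO_KG[unit.strip().lower()], 3)


def standardize_country(name):
    """Map known spellings to one canonical label; leave unknown names as they are."""
    return COUNTRY_ALIASES.get(name.strip().lower(), name.strip())


rows = [
    {"date": "2024-03-05", "weight": 2.0, "unit": "kg", "country": "USA"},
    {"date": "05/03/2024", "weight": 500.0, "unit": "g", "country": "U.S."},
    {"date": "05.03.2024", "weight": 4.4, "unit": "lb", "country": "United Kingdom"},
]

standardized = [
    {
        "date": standardize_date(r["date"]),
        "weight_kg": standardize_weight(r["weight"], r["unit"]),
        "country": standardize_country(r["country"]),
    }
    for r in rows
]
for row in standardized:
    print(row)
# {'date': '2024-03-05', 'weight_kg': 2.0, 'country': 'United States'}
# {'date': '2024-03-05', 'weight_kg': 0.5, 'country': 'United States'}
# {'date': '2024-03-05', 'weight_kg': 1.996, 'country': 'United Kingdom'}
```

Raising an error on an unrecognized date, instead of guessing, keeps unexpected formats visible so they can be added to the accepted list deliberately.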

6. Cross-Check Your Data

Cross-checking your data is crucial for verifying its accuracy. This process involves comparing your data against trusted sources or additional data sets to identify discrepancies. Cross-checking helps uncover errors that may not be immediately apparent, such as incorrect entries or outdated information. It can involve using automated tools to match data points or verifying them manually against reliable sources. Cross-checking your data in this way enhances its credibility and the reliability of the subsequent analysis.
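
The automated side of cross-checking can be sketched as a key-based comparison. In this Python example, the CRM records, the billing export treated as the trusted source, and the `customer_id`, `email`, and `city` fields are all hypothetical. The function reports records that have no counterpart in the reference and fields whose values disagree, which you would then verify manually.

```python
def cross_check(records, reference, key, fields):
    """Compare records with a trusted reference, matching rows on `key`.

    Returns (missing_from_reference, mismatches), where each mismatch is
    (key value, field name, our value, reference value).
    """
    ref_by_key = {r[key]: r for r in reference}
    missing, mismatches = [], []
    for rec in records:
        ref = ref_by_key.get(rec[key])
        if ref is None:
            missing.append(rec[key])
            continue
        for field in fields:
            if rec.get(field) != ref.get(field):
                mismatches.append((rec[key], field, rec.get(field), ref.get(field)))
    return missing, mismatches


crm = [
    {"customer_id": 1, "email": "ana@example.com", "city": "Boston"},
    {"customer_id": 2, "email": "bob@old-domain.com", "city": "Denver"},
    {"customer_id": 4, "email": "dee@example.com", "city": "Austin"},
]
billing = [  # hypothetical trusted source, e.g. an export from a billing system
    {"customer_id": 1, "email": "ana@example.com", "city": "Boston"},
    {"customer_id": 2, "email": "bob@example.com", "city": "Denver"},
    {"customer_id": 3, "email": "cy@example.com", "city": "Seattle"},
]

missing, mismatches = cross_check(crm, billing, key="customer_id", fields=("email", "city"))
print(missing)     # [4]
print(mismatches)  # [(2, 'email', 'bob@old-domain.com', 'bob@example.com')]
```

The output is a review list, not an automatic fix: the reference may itself be wrong, so each discrepancy still needs a human decision.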

Summing Up

Investing time in data cleansing upfront prevents future issues, enhances operational efficiency, and supports regulatory compliance. While it may be time-consuming, the benefits far outweigh the effort, resulting in better strategic decisions and a competitive edge. How you carry out the cleaning process, however, makes a big difference. Some companies hire in-house data cleansing professionals, while others outsource data cleansing to stay within their budget and available resources or simply for convenience. Both models have their advantages, and the right one for you depends on your requirements. Either way, prioritizing data cleaning lays the groundwork for meaningful insights and business success. Remember: “garbage in, garbage out.” Keep your data clean to achieve the best outcomes.